Researchers at the Massachusetts Institute of Technology (MIT) have developed a technique for training multipurpose robots that combines data from multiple, differing sources.

The method, called Policy Composition (PoCo), uses a type of generative AI model known as a diffusion model to learn from diverse datasets, allowing robots to perform a broader range of tasks.

Challenges in Traditional Robotic Training

Robots are typically trained on data from a single source, which limits their ability to operate in environments or perform tasks not covered during training.

Robotic training datasets may contain color images or tactile imprints collected for a specific task, such as packing items in a warehouse. Because each dataset usually covers a narrow range of tasks and a single environment, a robot trained on it struggles to adapt to new and varied tasks.

An Integrated Training Approach

The MIT team’s approach trains a separate diffusion model on each dataset. Each model learns a strategy, or policy, for completing a particular task from its own data. For example, one model might learn from human video demonstrations, while another might use data from teleoperated robotic arms.

The individual policies are then combined into a general policy that lets the robot handle multiple tasks in different settings.
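The article does not spell out how the policies are combined. A common way to compose diffusion models, and to our understanding close in spirit to how PoCo combines policies at inference time, is to take a weighted sum of the individual models' noise (score) predictions at each sampling step. The sketch below illustrates that idea on toy one-dimensional trajectories. It is not the authors' implementation: `make_toy_policy`, `compose`, `ddim_sample`, the weights, and the noise schedule are all hypothetical, and each "policy" is an analytic stand-in for a trained network.

```python
import math
import random
from typing import Callable, List, Sequence

Trajectory = List[float]
# Hypothetical interface: (noisy trajectory, alpha_bar) -> predicted noise.
NoisePredictor = Callable[[Trajectory, float], Trajectory]


def make_toy_policy(target: Sequence[float]) -> NoisePredictor:
    """Hypothetical stand-in for one trained diffusion policy.

    For x_t = sqrt(a) * x0 + sqrt(1 - a) * eps, it returns the noise that
    would be exact if the clean trajectory x0 were `target`.
    """
    def predict_noise(x_t: Trajectory, alpha_bar: float) -> Trajectory:
        s, n = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
        return [(x - s * g) / n for x, g in zip(x_t, target)]
    return predict_noise


def compose(policies: Sequence[NoisePredictor],
            weights: Sequence[float]) -> NoisePredictor:
    """Combine policies by a weighted sum of their noise predictions."""
    def predict_noise(x_t: Trajectory, alpha_bar: float) -> Trajectory:
        preds = [p(x_t, alpha_bar) for p in policies]
        return [sum(w * pred[k] for w, pred in zip(weights, preds))
                for k in range(len(x_t))]
    return predict_noise


def ddim_sample(policy: NoisePredictor, horizon: int,
                steps: int = 20, seed: int = 0) -> Trajectory:
    """Deterministic DDIM-style sampling from pure noise to a clean trajectory."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(horizon)]
    # alpha_bar rises from 0.01 (mostly noise) to exactly 1.0 (clean).
    schedule = [0.01 + 0.99 * i / steps for i in range(steps)] + [1.0]
    for a_t, a_next in zip(schedule[:-1], schedule[1:]):
        eps = policy(x, a_t)
        # Estimate the clean trajectory, then re-noise it to the next level.
        x0_hat = [(xi - math.sqrt(1.0 - a_t) * e) / math.sqrt(a_t)
                  for xi, e in zip(x, eps)]
        x = [math.sqrt(a_next) * c + math.sqrt(1.0 - a_next) * e
             for c, e in zip(x0_hat, eps)]
    return x


# Usage: two hypothetical policies trained on different data sources.
video_policy = make_toy_policy([0.0, 0.0, 0.0])
teleop_policy = make_toy_policy([1.0, 1.0, 1.0])
blended = ddim_sample(compose([video_policy, teleop_policy], [0.5, 0.5]), horizon=3)
print(blended)
```

With these toy predictors and weights that sum to one, the composed sampler lands on the weighted blend of the individual targets. Trained networks would not blend this neatly, but the structure (per-model predictions summed inside the sampling loop) is the same.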

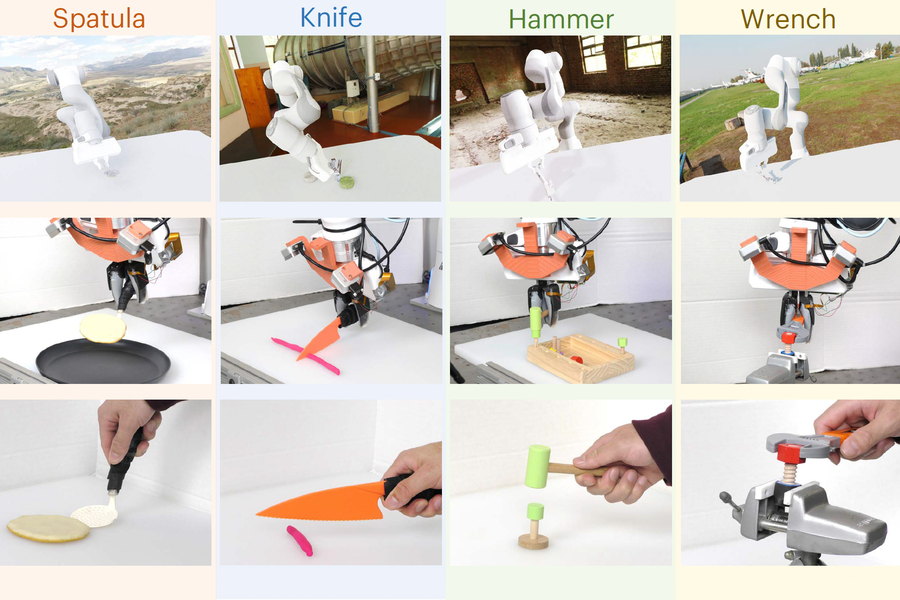

Compared with baseline training techniques, the composite method improved task performance by 20 percent. In experiments, it enabled robots to use tools such as hammers and screwdrivers and to adapt to tasks they were not specifically trained for, such as flipping objects with a spatula or using a wrench.

PoCo builds on Diffusion Policy, earlier work introduced by researchers from MIT, Columbia University, and the Toyota Research Institute. That work laid the groundwork for using diffusion models to refine robot trajectories: noise is added to the trajectories in the training data, and the model learns to remove it gradually, producing refined actions for the robot.
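To illustrate the noising step described above, the sketch below uses the standard DDPM-style forward process, which is assumed here rather than taken from the Diffusion Policy code: a clean trajectory is blended with Gaussian noise according to a schedule, and a model is trained to predict that noise. The helper names, the linear noise schedule, the step index, and the four-waypoint trajectory are hypothetical. A real diffusion policy would replace the noise estimate with a neural network conditioned on the robot's observations and would apply it repeatedly, one step at a time.

```python
import math
import random
from typing import List, Sequence, Tuple


def make_alpha_bars(num_steps: int = 100, beta_start: float = 1e-4,
                    beta_end: float = 0.02) -> List[float]:
    """Cumulative products of (1 - beta_t) for an assumed linear beta schedule."""
    alpha_bars, prod = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def add_noise(x0: Sequence[float], t: int, alpha_bars: Sequence[float],
              rng: random.Random) -> Tuple[List[float], List[float]]:
    """Forward process: x_t = sqrt(a_t) * x0 + sqrt(1 - a_t) * eps."""
    a = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return x_t, eps


def denoising_loss(eps_pred: Sequence[float], eps: Sequence[float]) -> float:
    """Mean squared error between predicted and true noise (the training target)."""
    return sum((p - e) ** 2 for p, e in zip(eps_pred, eps)) / len(eps)


def estimate_x0(x_t: Sequence[float], eps_pred: Sequence[float], t: int,
                alpha_bars: Sequence[float]) -> List[float]:
    """Invert the forward process at step t using a noise estimate."""
    a = alpha_bars[t]
    return [(x - math.sqrt(1.0 - a) * e) / math.sqrt(a)
            for x, e in zip(x_t, eps_pred)]


# Usage on a hypothetical 1-D trajectory of four waypoints.
rng = random.Random(0)
alpha_bars = make_alpha_bars()
trajectory = [0.0, 0.1, 0.2, 0.3]
noisy, true_eps = add_noise(trajectory, t=60, alpha_bars=alpha_bars, rng=rng)
print(denoising_loss([0.0] * 4, true_eps))          # loss of a "predict zero noise" model
print(estimate_x0(noisy, true_eps, 60, alpha_bars))  # recovers the clean trajectory
```

A model that predicted the noise perfectly would reach zero loss and could undo the corruption exactly; training pushes a network toward that behavior across all noise levels.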

In both simulations and real-world experiments, robots using PoCo performed complex tool-use tasks with improved accuracy and adaptability. The technique could also extend to more complex scenarios in which a robot must switch between different tools or tasks.

Future directions for this research include applying the technique to long-horizon tasks that require using several tools in sequence, and incorporating larger and more varied datasets to further improve performance. The overarching goal is to create robots that can not only perform a diverse array of tasks but also adapt to new challenges as they arise.

Who Supports This Research?

The research was supported by several organizations, including Amazon, the Singapore Defense Science and Technology Agency, the U.S. National Science Foundation, and the Toyota Research Institute. The work could influence how robots are trained for deployment across a range of industries.

The findings from this study are scheduled to be presented at the upcoming Robotics: Science and Systems Conference.


Featured Image courtesy of the researchers