Samsung Electronics, once the dominant force in memory semiconductors, is struggling in the AI-driven chip market and has lost $126 billion in market value, according to S&P Capital IQ. Despite its early leadership, Samsung has fallen behind its competitor SK Hynix in developing high-bandwidth memory (HBM), a component essential to AI applications and used heavily by Nvidia.

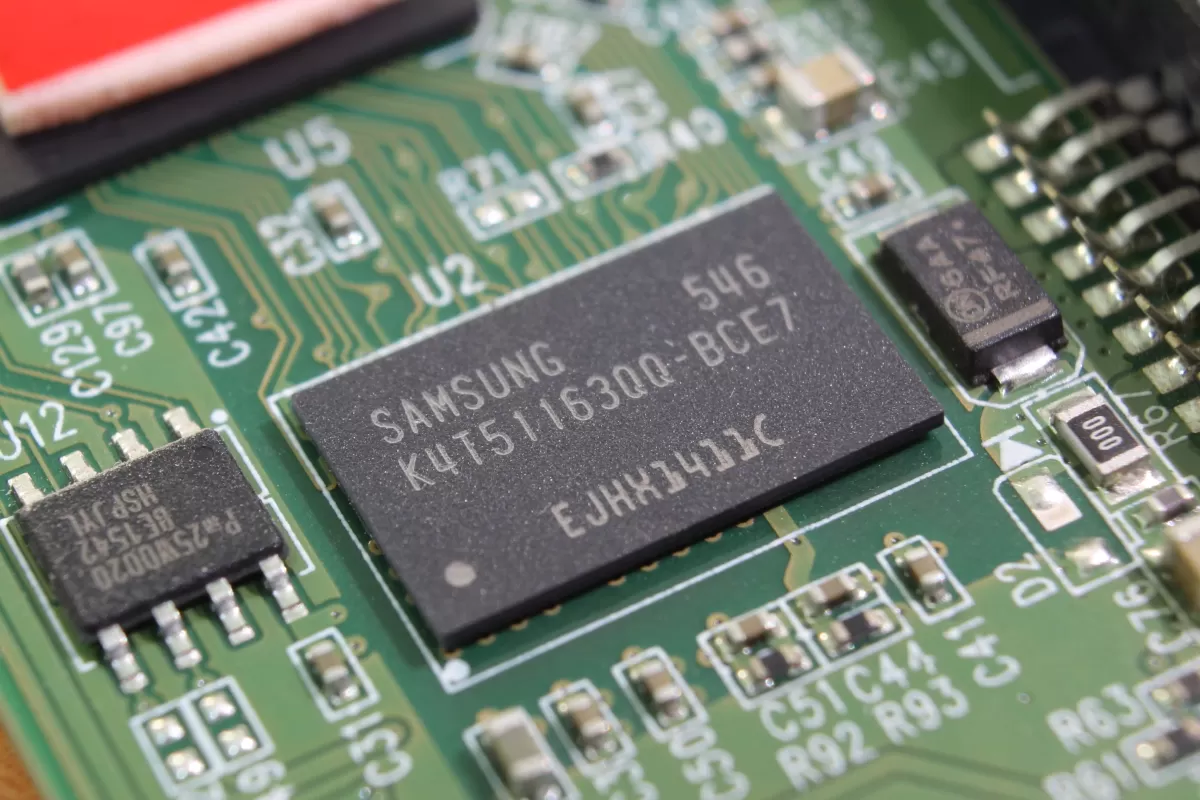

Memory chips, integral to devices such as smartphones and laptops, have long been Samsung’s stronghold, a market in which it has outpaced rivals SK Hynix and U.S.-based Micron. However, the rise of AI applications such as OpenAI’s ChatGPT has increased demand for high-performance GPUs, where Nvidia has become the top player, setting new standards in AI training infrastructure. Central to Nvidia’s success is the high-bandwidth memory that powers its GPUs. HBM, which is made by stacking dynamic random access memory (DRAM) chips, was once a niche market. Samsung’s decision not to prioritize HBM investment left it lagging as the AI boom expanded.

Kazunori Ito, director of equity research at Morningstar, explained that Samsung’s limited focus on HBM stemmed from the high costs and initially small market size. “Due to the difficulty of the technology involved in stacking DRAMs and the small size of the addressable market, it was believed that the high development costs were not justified,” Ito commented.

SK Hynix, by contrast, invested heavily in HBM development, positioning itself as a preferred Nvidia partner. SK Hynix’s rapid launch of HBM chips led to their integration into Nvidia’s architecture, strengthening ties with the U.S. tech giant. Notably, Nvidia’s CEO personally asked SK Hynix to expedite production of its next-generation chip, underscoring the importance of HBM for Nvidia’s product lineup. This strategic advantage contributed to SK Hynix’s record operating profit in the latest quarter.

Brady Wang, associate director at Counterpoint Research, highlighted SK Hynix’s success, attributing it to robust R&D investments and partnerships within the industry, which have given the company a leading edge in both HBM innovation and market presence.

In response, Samsung stated that its HBM sales rose by over 70% in the third quarter, attributing the growth to its current HBM3E products. The company also noted that it plans to begin mass production of HBM4 in the latter half of 2025, aiming to regain its footing in the market. Despite this, analysts point out that Samsung’s ability to recover in the short term heavily depends on its partnership with Nvidia. Approval from Nvidia, which commands over 90% of the AI chip market, is essential for Samsung to compete with SK Hynix in the AI-driven chip sector.

Samsung has made strides in qualifying for Nvidia’s HBM supplier list, with a spokesperson stating that the company achieved “meaningful progress” in HBM3E’s development and has completed a significant phase in Nvidia’s qualification process. Samsung expects to boost sales by the fourth quarter of the year.

Wang also noted that Samsung’s expertise in research and development, combined with its extensive semiconductor manufacturing capacity, could support the company in catching up with SK Hynix if these strengths are leveraged effectively.

However, analysts remain cautious about Samsung’s ability to close the gap quickly, with Ito noting that the company’s delayed investment in HBM and lack of first-mover advantage remain key hurdles.

Featured image courtesy of Asia Fund Managers
