Roblox has rolled out a series of updates aimed at improving safety for its youngest users and giving parents more control over how their children use the platform.

The updates, announced recently, include stricter messaging policies for users under 13 and make it easier for parents to manage account settings and monitor activity remotely. They come in response to mounting criticism of Roblox’s safety practices, including reports of predatory behavior and a class-action lawsuit.

Tighter Controls on Messaging for Younger Players

Children under 13 will no longer be able to send private messages outside specific games or experiences. They can still communicate publicly within games, but private messaging is now restricted unless parents actively enable it.

Roblox had already limited access to certain types of content for this age group, such as unrated experiences and games designed for social interaction or free-form creativity. These new rules further tighten restrictions on how younger users can interact on the platform.

Remote Controls for Parents

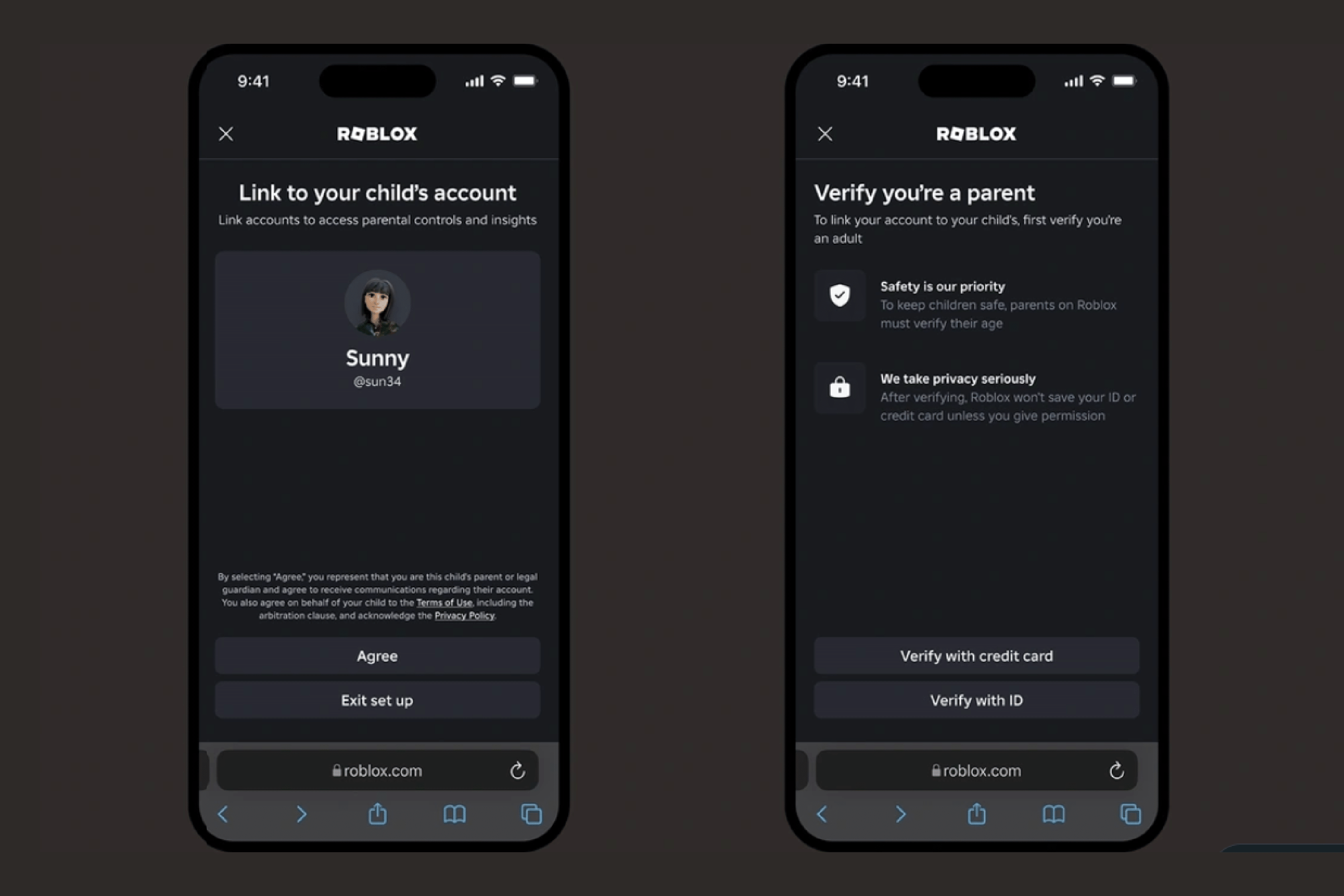

To give parents more control, Roblox is introducing a remote management feature that lets them oversee their child’s account through their own device.

Parents can now link their account to their child’s, enabling centralized control over settings such as screen-time limits, friend lists, and requests to access games with specific maturity ratings. Activating these controls requires parental identity verification through a government ID or credit card.

Growing Concerns Over Platform Safety

Roblox has faced heavy scrutiny over its handling of child safety, particularly after reports of predatory incidents involving its users. A Bloomberg investigation highlighted multiple cases, including a 15-year-old girl who was groomed and abducted by a developer she met on Roblox. These incidents have led to calls for stronger safeguards, with some advocates criticizing the platform for being too slow to act.

In response, Roblox says it has implemented more than 30 safety improvements in 2024. These include updated content labeling, which replaces basic age ratings with detailed maturity descriptors. Under this system, users under 13 are blocked from accessing games designed for socializing with strangers or activities involving free-form user-generated content. To access the most mature experiences, users must verify their age and declare themselves 17 or older.

The company has collaborated with organizations such as the Family Online Safety Institute and the National Association for Media Literacy Education to develop its safety tools. Stephen Balkam, CEO of FOSI, described the updates as a meaningful step forward, emphasizing that these features provide parents with effective but non-intrusive monitoring options.

Matt Kaufman, Roblox’s chief safety officer, stated that the updates are part of an ongoing commitment to safety rather than a reaction to specific incidents. The company continues to refine its policies to balance creative freedom with protecting its most vulnerable users.

Featured Image courtesy of Roblox
