Google DeepMind has announced Gemini Robotics On-Device, a vision-language-action (VLA) model designed to run locally on robots without an internet connection. It builds on the Gemini Robotics model introduced in March and lets robots perform complex tasks by directly controlling their movements.

Developers can fine-tune and direct the robot’s actions using natural language prompts. Google claims that Gemini Robotics On-Device performs nearly as well as its cloud-based predecessor and outperforms other unnamed on-device models in benchmark tests.

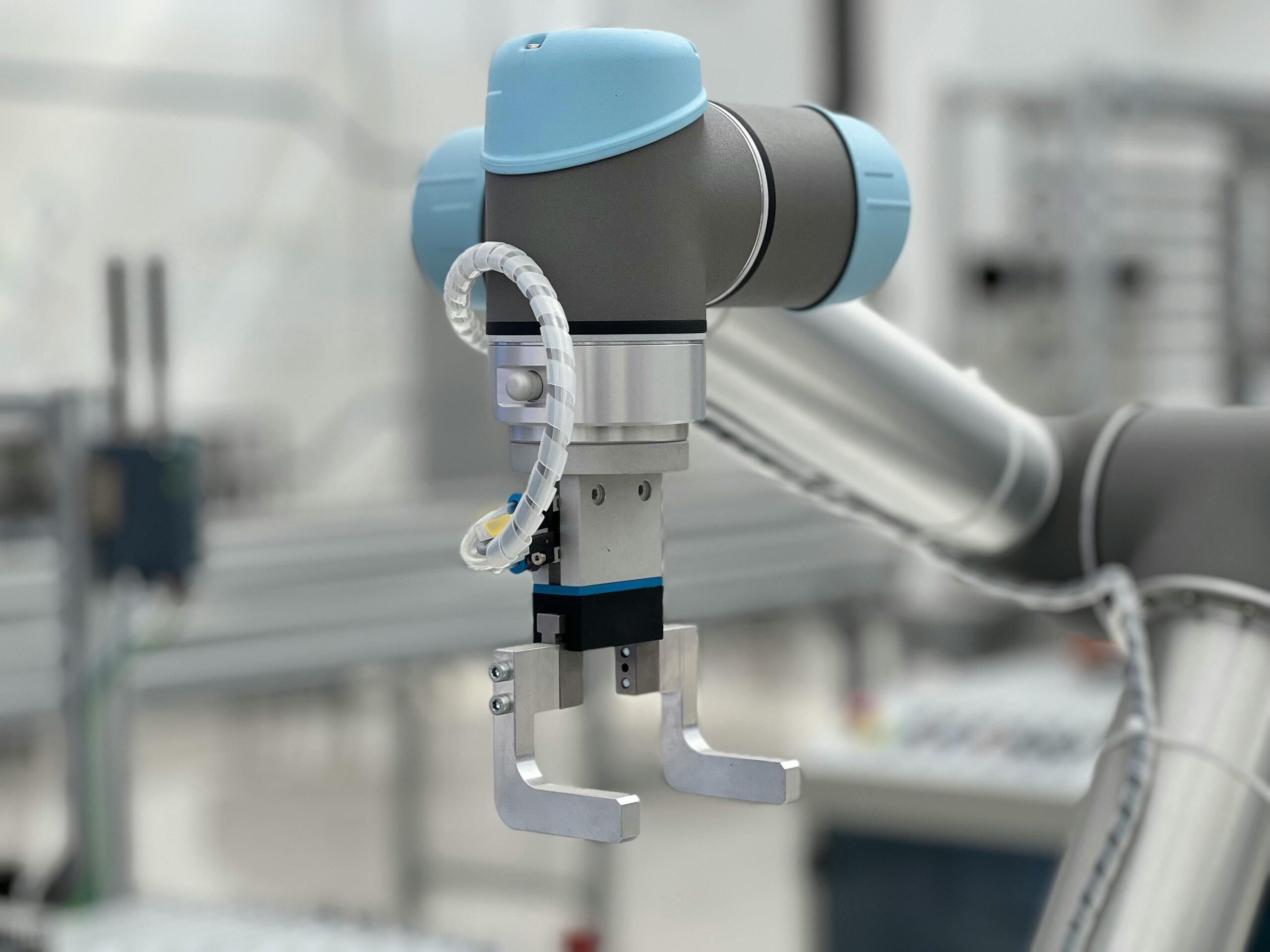

Demonstrations Show Robots Performing Real-World Tasks

In demonstrations, robots running the new model performed tasks such as unzipping bags and folding clothes. Although the model was originally trained for ALOHA robots, it has been adapted to run on the bi-arm Franka FR3 and on Apptronik’s Apollo humanoid robot. The Franka FR3 notably handled tasks it had not seen before, such as industrial belt assembly.

Google is also launching a Gemini Robotics SDK. With it, developers can train robots on new tasks by providing 50 to 100 demonstrations, using the MuJoCo physics simulator.

Other companies are entering the robotics AI space as well: Nvidia is building a platform for humanoid foundation models, Hugging Face is developing open models and datasets for robotics, and Korean startup RLWRLD is working on foundational models for robots.

What The Author Thinks

Running AI models directly on robots, without relying on an internet connection, marks a key shift toward faster, more reliable, and more secure robotic applications. This local approach will unlock new possibilities in industries where connectivity is limited or latency is critical, moving robotics out of controlled labs and into real-world environments sooner.

Featured image credit: KJ Brix via Pexels
