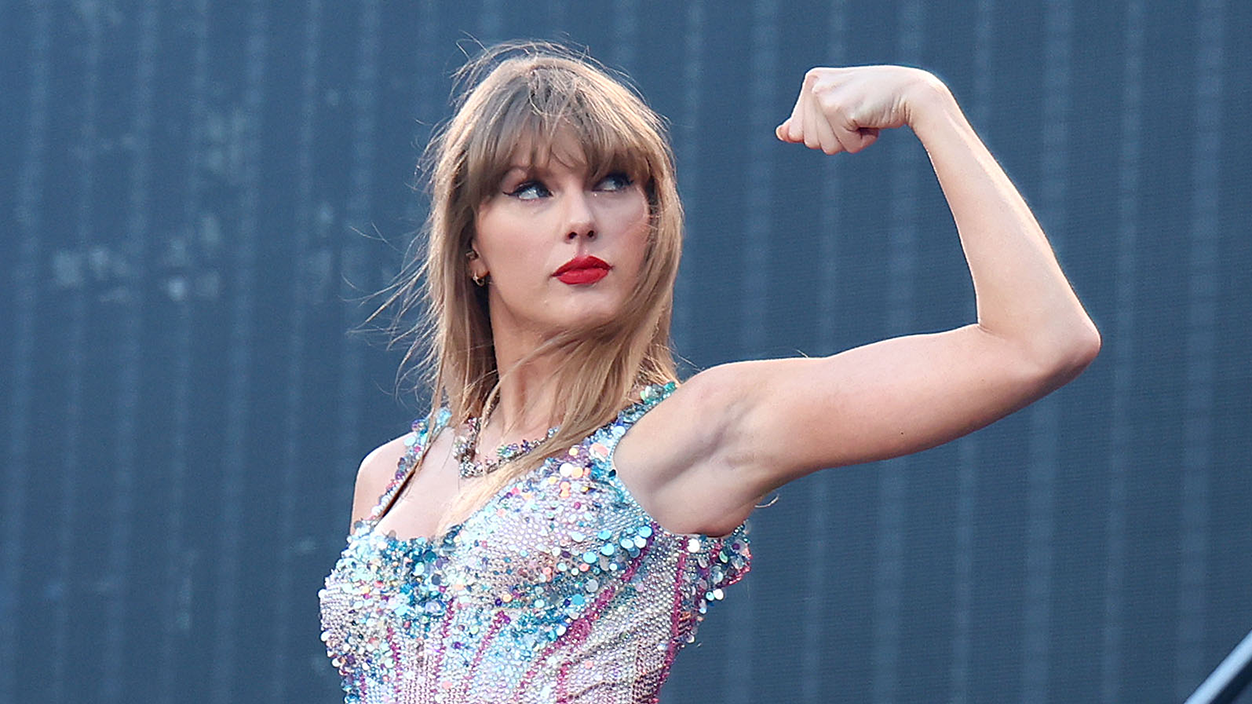

Taylor Swift endorsed Vice President Kamala Harris on Tuesday, September 10, using the occasion to warn about the increasing role of artificial intelligence (AI) in spreading misinformation during political campaigns.

This came after Donald Trump circulated doctored images of Swift: one showed her dressed in patriotic attire with the caption "Taylor Swift Wants You to Vote for Donald Trump," and another showed crowds of women wearing "Swifties for Trump" shirts. The incident highlighted the growing threat of AI-generated deepfakes in politics. Such content can confuse voters by fabricating celebrity endorsements or impersonating opponents.

Federal Regulators Struggle to Address AI in Campaigns

Despite rising concerns about the misuse of AI, especially as the 2024 U.S. presidential election approaches, there are currently no federal regulations that specifically address its application in political campaigns.

On Tuesday, the Federal Election Commission (FEC) confirmed that it would not vote, before the election, on a proposed rule governing AI use in political ads. FEC Chairman Sean Cooksey stated that "deceptive campaign ads" are already prohibited under the Federal Election Campaign Act, regardless of whether AI is involved. However, this general guidance leaves open many questions about how deepfakes will be handled in the current election cycle.

The Federal Communications Commission (FCC) has stepped in with a proposal requiring political ads to disclose any use of AI, but those rules would apply only to broadcast and telephone communications. Social media posts, such as the ones Trump shared, fall outside the FCC's regulatory scope.

Lisa Gilbert, co-president of the advocacy group Public Citizen, said that while her organization is pushing for AI regulation in elections, much of the effort feels experimental, which she likened to "throwing everything at the wall to see where things will stick." The ultimate goal remains meaningful federal legislation regulating AI in political contexts.

States Move Ahead with Deepfake Legislation

In the absence of federal rules, some states are moving ahead with their own laws. More than 20 states have enacted or are considering deepfake legislation, and California Governor Gavin Newsom has backed measures that would ban AI-altered political ads. Newsom's push came in response to Tesla CEO Elon Musk sharing an altered video of Kamala Harris earlier this year.

While it remains unclear how many people were influenced by Trump's deepfake of Swift, her real Instagram endorsement of Harris drew significant engagement. According to the U.S. General Services Administration, Swift's post sent more than 330,000 visitors to the government's voter registration website, vote.gov. In her post, Swift expressed her fears about AI's potential to misinform voters, stressing that the best way to counter false narratives is to share the truth. She also announced her support for Harris and her running mate, Tim Walz, citing their commitment to LGBTQ+ rights and reproductive care.

Gilbert noted that while celebrities like Swift can quickly debunk false information for their large audiences, most Americans lack that kind of platform or influence. That makes AI-generated deepfakes particularly dangerous for people without such reach, whether they are candidates in local races or ordinary social media users.