Some artificial intelligence systems are falling for the same optical illusions that trick human vision, a finding that researchers are using to test theories about how the brain processes information and why people perceive the world in ways that do not always match physical reality.

Why Optical Illusions Matter

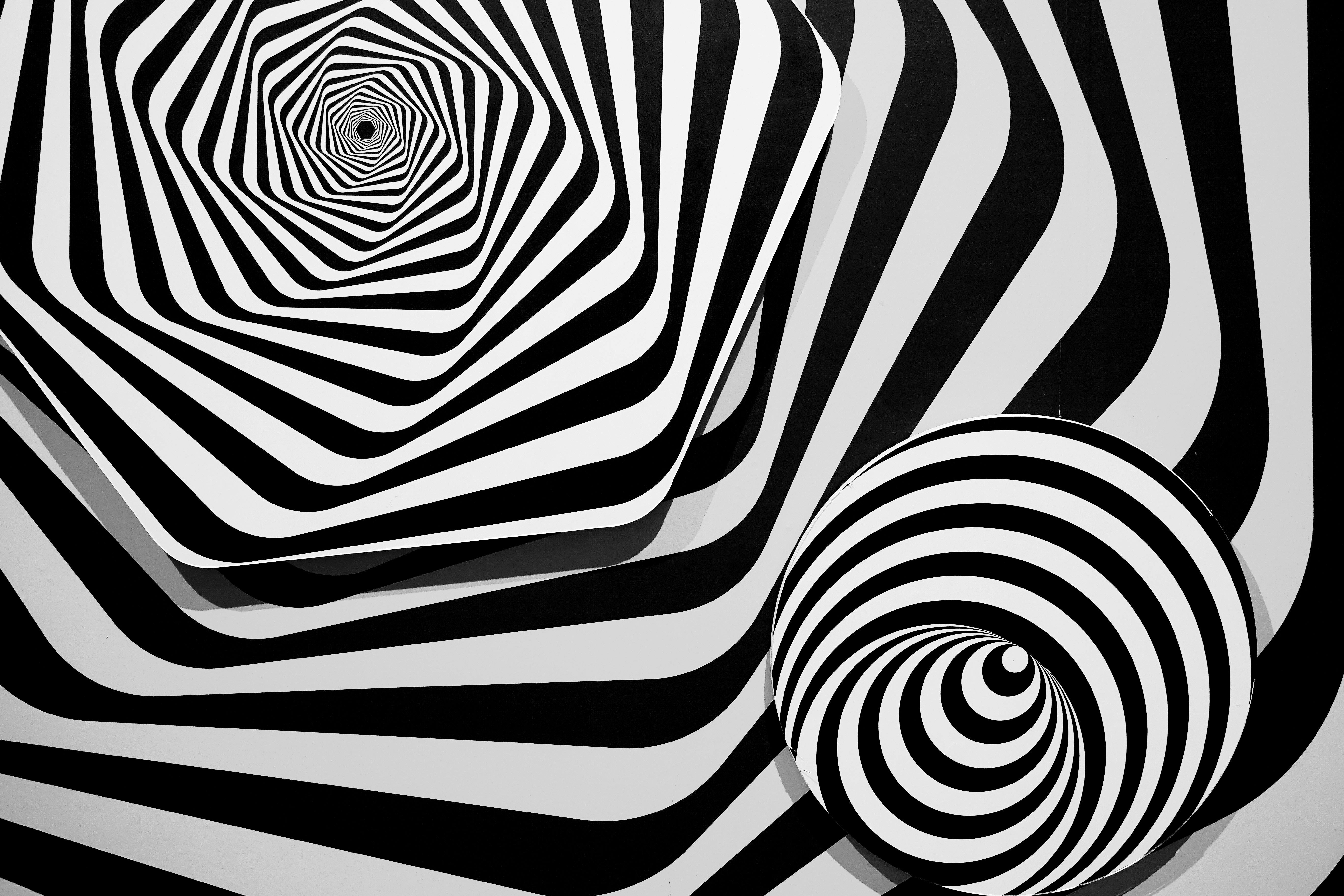

Optical illusions, such as the Moon appearing larger near the horizon or static images that seem to move, show that human perception does not always reflect the physical properties of the world. Rather than being simple mistakes, these effects reveal how the brain uses shortcuts to extract useful information from complex visual scenes. Since the brain cannot process every detail, it selects and predicts what matters most.

Some deep neural networks, which are the systems behind many modern AI tools, have been found to react to these illusions in ways similar to humans. Scientists are now using that overlap to study the underlying rules that guide perception.

Using AI To Test Vision Theories

Eiji Watanabe, an associate professor of neurophysiology at Japan’s National Institute for Basic Biology, said deep neural networks allow researchers to test ideas about visual processing without the ethical limits that apply to experiments on humans. In one study, his team used a model called PredNet, which is based on predictive coding, the theory that the brain anticipates what it expects to see and then corrects those predictions using incoming sensory information.
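The core predictive-coding loop can be illustrated with a deliberately simple sketch. It is a hypothetical toy on a one-dimensional signal, not PredNet and not Watanabe's code: the function name `predictive_coding`, the learning rate and the test signal are assumptions chosen for illustration. At each step the model predicts the incoming value from its current estimate, measures the prediction error and shifts its estimate toward what it actually observed.

```python
def predictive_coding(observations, learning_rate=0.5, initial_guess=0.0):
    """Hypothetical toy predictive-coding loop on a 1-D signal (not PredNet).

    At each step the model predicts the next observation from its current
    estimate, measures the prediction error, and nudges the estimate toward
    the observation in proportion to that error.
    """
    estimate = initial_guess
    errors = []
    for observed in observations:
        prediction = estimate              # what the model expects to see
        error = observed - prediction      # mismatch with the incoming signal
        estimate += learning_rate * error  # adjust the internal model
        errors.append(error)
    return estimate, errors


if __name__ == "__main__":
    final, errs = predictive_coding([1.0] * 6)
    print(final, errs)  # prints 0.984375 [1.0, 0.5, 0.25, 0.125, 0.0625, 0.03125]
```

Fed a constant signal, the prediction error halves at each step, a small-scale version of the idea that a well-tuned internal model is "surprised" less and less by a predictable scene. PredNet itself is far more elaborate and works on video frames through a stack of layers, so this only conveys the principle.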

PredNet was trained on around one million frames of natural scenes recorded by head-mounted cameras and was never shown optical illusions during training. When the researchers later presented it with versions of the rotating snakes illusion, where a still image appears to spin, the model predicted motion in the same images that fool human viewers. It was not misled by altered versions that humans also perceive as static.

Watanabe said this supports the idea that both the brain and the model rely on predictions of motion when interpreting certain visual patterns.

Where AI And Human Vision Differ

The researchers also found differences. When people focus their eyes on one part of the rotating snakes illusion, the movement often seems to stop in that spot while continuing in peripheral vision. PredNet, however, perceived all parts of the image as moving at the same time. Watanabe said this is likely because the model lacks an attention mechanism and processes the entire image uniformly.

He added that no existing neural network experiences the full range of illusions seen by humans, and that although AI may appear to behave like people in some tasks, its internal structure remains very different from the human brain.

Quantum-Inspired Models Of Perception

Other researchers are exploring whether ideas from quantum mechanics can help model ambiguous illusions. Ivan Maksymov of Charles Sturt University in Australia built a deep neural network that uses quantum tunnelling to interpret figures such as the Necker cube, which flips between two visual orientations, and the Rubin vase, which can be seen either as a vase or as two faces.

When these images were fed into the system, it alternated between interpretations over time in a way that matched how human perception shifts. Maksymov said the timing of those switches was close to that observed in people during experiments.

He said this does not mean the brain works through quantum physics, but that quantum-based models may better describe how people choose between competing interpretations, an approach known as quantum cognition.

Implications For Space And Human Vision

The same modelling approach could also help researchers study how perception changes in space. Research on astronauts has shown that after months aboard the International Space Station, they no longer favour one perspective of the Necker cube over another, unlike on Earth, where gravity helps guide depth perception.

Maksymov said this may be because, without gravity, cues based on eye height and body orientation are altered. Understanding these changes could matter for long-term human space travel, where reliable perception is important.

Featured image credits: Pexels
