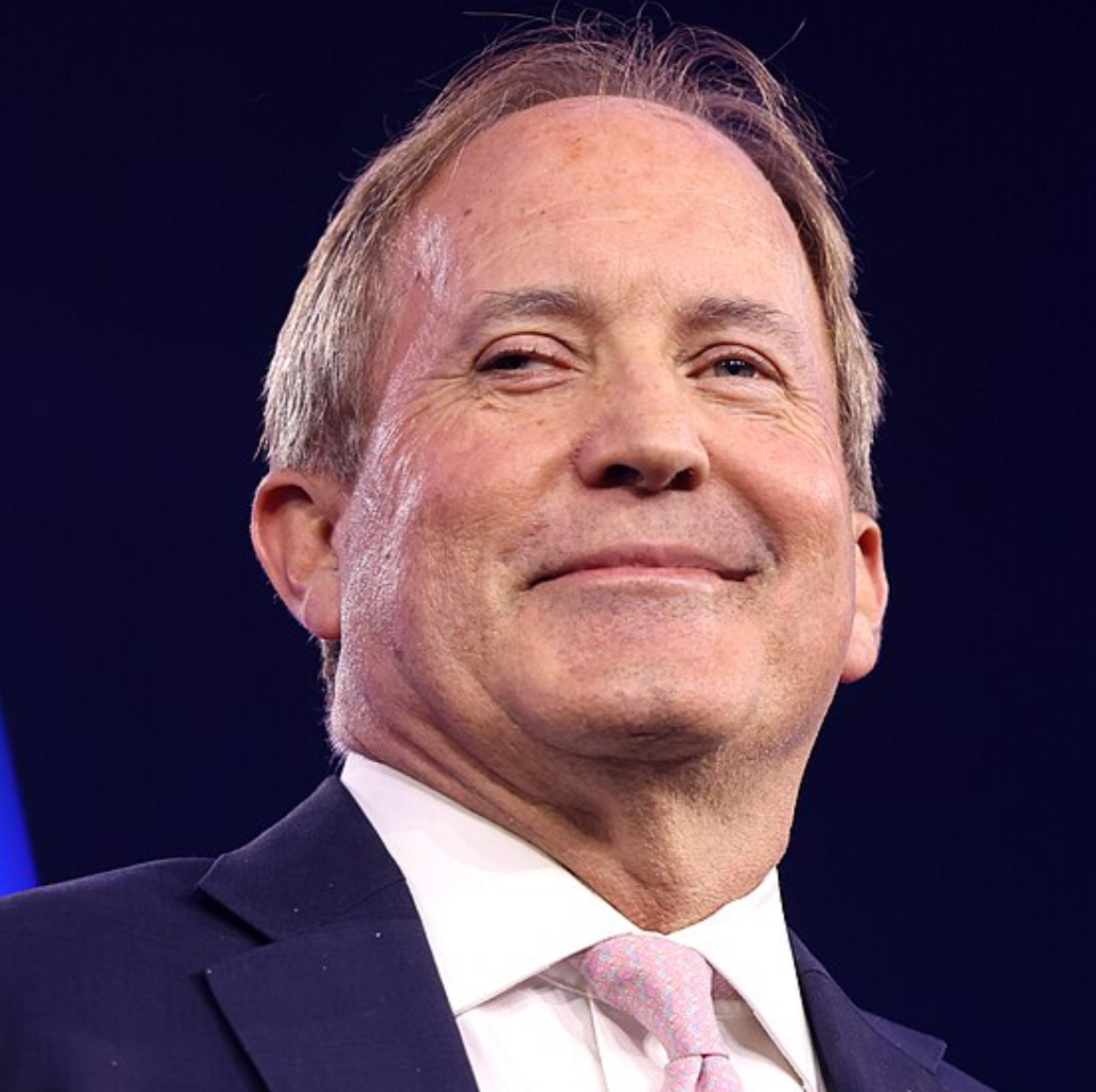

Texas Attorney General Ken Paxton has opened an investigation into both Meta AI Studio and Character.AI for “potentially engaging in deceptive trade practices and misleadingly marketing themselves as mental health tools,” according to a press release issued Monday.

“In today’s digital age, we must continue to fight to protect Texas kids from deceptive and exploitative technology,” Paxton said. “By posing as sources of emotional support, AI platforms can mislead vulnerable users, especially children, into believing they’re receiving legitimate mental health care. In reality, they’re often being fed recycled, generic responses engineered to align with harvested personal data and disguised as therapeutic advice.”

The probe follows Senator Josh Hawley’s recent announcement of an investigation into Meta after reports surfaced that its AI chatbots had interacted inappropriately with children, including through flirtatious exchanges.

Allegations of Misrepresentation

The Texas Attorney General’s office alleges that Meta and Character.AI have promoted AI personas that appear to act as “professional therapeutic tools, despite lacking proper medical credentials or oversight.”

On Character.AI, one popular user-generated persona, simply called Psychologist, has drawn significant attention among young users. While Meta does not officially provide therapy bots, children can still access Meta AI or third-party personas for conversations that may appear therapeutic in nature.

Meta spokesperson Ryan Daniels said the company clearly labels AIs and includes disclaimers noting that responses are generated by AI rather than people. He added that Meta’s systems are designed to direct users to professional help when necessary.

Character.AI said that disclaimers are included in every chat, reminding users that a “Character” is not a real person and that conversations should be treated as fictional. The company adds extra disclaimers when personas include terms such as “psychologist,” “therapist,” or “doctor” to prevent reliance on them for professional advice.

Data Privacy Concerns

Paxton’s office also raised concerns that both companies collect and exploit sensitive user data under the guise of confidentiality. “Though AI chatbots assert confidentiality, their terms of service reveal that user interactions are logged, tracked, and exploited for targeted advertising and algorithmic development, raising serious concerns about privacy violations, data abuse, and false advertising,” he said.

Meta’s privacy policy confirms that it collects prompts, feedback, and interactions with AI chatbots to improve its models. While it does not explicitly reference advertising, the policy allows sharing data with third parties such as search engines for “personalized outputs.” Given Meta’s business model, this effectively translates into targeted advertising.

Character.AI’s privacy policy outlines data collection that includes identifiers, demographics, location, browsing behavior, and app usage. The company tracks users across platforms including TikTok, YouTube, Reddit, Facebook, Instagram, and Discord. This data is linked to accounts and used to train AI models, personalize services, and provide targeted advertising. A spokesperson confirmed the same policy applies to teenagers and added that targeted advertising features are still being explored, though chat content has not been used for that purpose.

Child Safety Issues

Both companies maintain that their services are not designed for users under 13. However, Meta has faced criticism for failing to adequately restrict access for children, while Character.AI has acknowledged that its platform attracts younger audiences. Character.AI CEO Karandeep Anand has even said that his six-year-old daughter uses the chatbots under his supervision.

This type of data collection and algorithmic targeting is what legislation like the Kids Online Safety Act (KOSA) aims to address. The bill was set to pass in 2024 but stalled amid heavy lobbying from the tech industry. It was reintroduced in May 2025 by Senators Marsha Blackburn (R-TN) and Richard Blumenthal (D-CT).

Paxton has issued civil investigative demands (legal orders requiring companies to produce documents, data, or testimony) to determine whether Meta and Character.AI have violated Texas consumer protection laws.

Author’s Opinion

What stands out here is how quickly these AI platforms have blurred the line between entertainment and healthcare. Children may not recognize the difference, especially when AI personas use language that mimics therapy. Disclaimers don’t mean much if kids ignore them, and parents may not always monitor closely. The real risk is that a generation could grow up relying on AI bots for emotional guidance, while their private thoughts are quietly turned into fuel for advertising systems.

Featured image credit: Wikimedia Commons
