Many enterprises are currently running “proofs of concept” for generative artificial intelligence (gen AI) in their data centers, yet production applications remain scarce. According to Intel, moving from experiments to production will take some time.

Melissa Evers, vice president of the Software and Advanced Technology Group at Intel, noted in an interview with ZDNET, “There are a lot of folks who agree that there’s huge potential” in gen AI across various sectors, including retail and government. “But shifting that into production is really, really challenging.”

Evers and Bill Pearson, who leads Solution & Ecosystem Engineering and Data Center & AI Software at Intel, cited Ernst & Young data showing that although 43% of enterprises are exploring gen AI proofs of concept, none have yet moved to production use cases.

Evers explained that companies are currently exploring “generic use cases.” She expects customization and deeper integration over the next year, with more sophisticated systems emerging a year or two after that. Evers estimated a “three to five-year path” to fully realize gen AI’s potential, consistent with historical patterns of technology adoption.

Several factors contribute to the slow progress, including security concerns and the rapid pace of change in gen AI. Evers mentioned the continuous development of new models and database solutions as significant challenges.

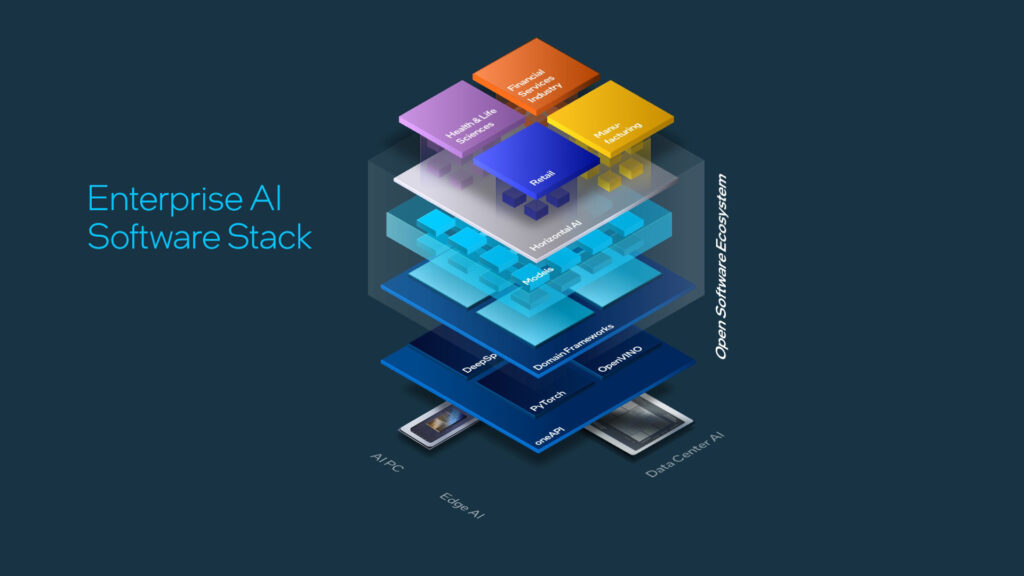

To address these issues, Intel has announced numerous partnerships aimed at giving enterprises near-turnkey gen AI components. Pearson described rack-scale hardware designs developed with OEM partners that combine compute, networking, storage, and foundational software. This approach draws on both open-source microservices and solutions from independent software vendors (ISVs).

Intel’s offerings aim to be “hardened” for security while also being modular, allowing for experimentation with different models and database solutions. Pearson noted that some companies seek ready-to-use solutions, while others prefer to choose specific functionalities from systems integrators. A minority of “very sophisticated enterprises” want to build their own solutions, with Intel’s support.

Intel’s strategy resembles Nvidia Inference Microservices (NIM), which offers ready-built components for the enterprise. To counter Nvidia’s dominance, Intel has joined companies such as Red Hat and VMware in an open-source software consortium under the Linux Foundation, the Open Platform for Enterprise AI (OPEA). OPEA aims to develop robust, composable gen AI systems.

The OPEA initiative provides “reference implementations” to help companies apply and tune generic functions. Evers explained that these implementations would vary significantly depending on the sector, such as retail versus health care.

Asked about Nvidia’s dominance in AI accelerator chips, Pearson expressed confidence in Intel’s open, neutral, horizontal approach, emphasizing openness, choice, and trust as principles that have shaped the history of technology.

Featured Image courtesy of Business Plus
