Nvidia has officially launched its new Rubin computing architecture, positioning it as the company’s most advanced platform for artificial intelligence workloads, with production already underway and a broader ramp-up expected in the second half of the year.

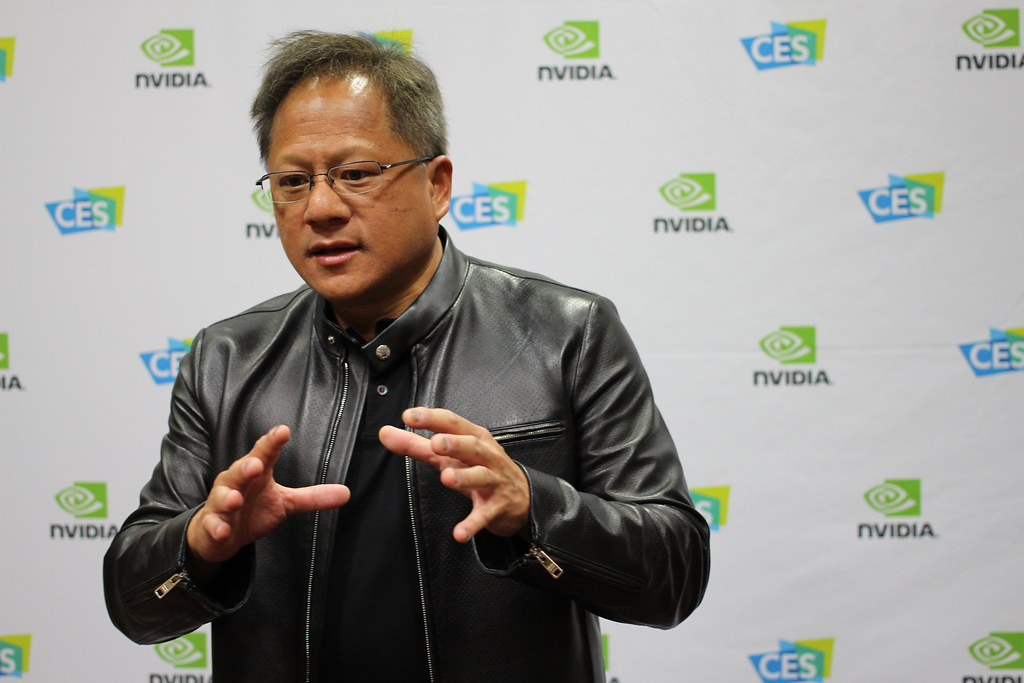

Speaking at the Consumer Electronics Show in Las Vegas on Monday, Nvidia CEO Jensen Huang said the Rubin architecture is designed to meet the rapidly rising computational demands of modern AI systems. He told the audience that the platform, named after astronomer Vera Florence Cooper Rubin, is now in full production.

Rubin was first announced in 2024 and represents the next step in Nvidia’s fast-paced hardware cycle. It is set to replace the Blackwell architecture, which itself succeeded the Hopper and Lovelace generations. This cadence has played a central role in Nvidia’s rise to become the world’s most valuable publicly traded company.

Adoption By Cloud Providers And Supercomputers

Rubin-based systems are already slated for deployment by nearly every major cloud provider. Nvidia said its partners for the architecture include Amazon Web Services as well as AI developers Anthropic and OpenAI.

Beyond commercial cloud services, Rubin systems are also slated for high-performance computing projects. They will power HPE’s Blue Lion supercomputer and the forthcoming Doudna supercomputer at Lawrence Berkeley National Laboratory.

Architecture Design And Components

The Rubin platform consists of six separate chips designed to operate together. At its core is the Rubin GPU, supported by updates across storage, networking, and processing components to address emerging bottlenecks in large-scale AI systems.

Nvidia said the architecture introduces improvements to its BlueField data processing units and NVLink interconnect technology, aimed at easing constraints in data movement between chips. It also includes a new Vera CPU, which the company said is intended to support agentic reasoning workloads.

Dion Harris, Nvidia’s senior director of AI infrastructure solutions, said the design reflects growing memory demands driven by newer AI workflows. He pointed to increased stress on key-value caches used by large models to manage and condense input data.

Harris said Nvidia has added a new tier of external storage that connects directly to the compute device, allowing AI operators to scale storage capacity more efficiently as models take on longer-term and more complex tasks.
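To see why key-value caches strain memory as models handle longer inputs, a rough back-of-the-envelope estimate helps. The sketch below is illustrative only: the function name and the model dimensions (80 layers, 8 key-value heads, a head size of 128, 16-bit values) are assumptions chosen for the example, not figures from Nvidia or from any specific model.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len,
                   batch_size=1, bytes_per_value=2):
    """Estimate key-value cache size for a transformer-style model.

    Each token stores one key vector and one value vector (hence the
    factor of 2) per layer and per key-value head.
    """
    per_token = 2 * num_layers * num_kv_heads * head_dim * bytes_per_value
    return per_token * seq_len * batch_size


if __name__ == "__main__":
    # Hypothetical model dimensions, assumed purely for illustration.
    layers, kv_heads, head_dim = 80, 8, 128

    for tokens in (8_192, 131_072, 1_048_576):
        size_gib = kv_cache_bytes(layers, kv_heads, head_dim, tokens) / 1024**3
        print(f"{tokens:>9} tokens: {size_gib:.1f} GiB per sequence")
```

Under these assumed dimensions, the cache grows linearly with the number of tokens held in context, so a single long-running session can occupy tens of gibibytes before the model's own weights are counted. That linear growth is the kind of pressure a separate storage tier is meant to relieve.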

Performance And Efficiency Gains

According to Nvidia’s internal testing, Rubin delivers a substantial jump in performance over its predecessor. The company said the new architecture runs model-training workloads three and a half times as fast as Blackwell and inference workloads up to five times as fast, reaching as much as 50 petaflops.

Nvidia also said Rubin improves energy efficiency, supporting up to eight times more inference compute per watt compared with the previous generation.

Growing AI Infrastructure Spending

The launch comes amid escalating global investment in AI infrastructure. Demand for Nvidia’s chips has surged as AI developers and cloud providers compete to secure computing capacity and the power-hungry facilities needed to run it.

On an earnings call in October 2025, Huang said Nvidia estimates that between $3 trillion and $4 trillion will be spent on AI infrastructure worldwide over the next five years, reflecting the scale of investment tied to the expansion of AI systems.

Featured image credits: Flickr
